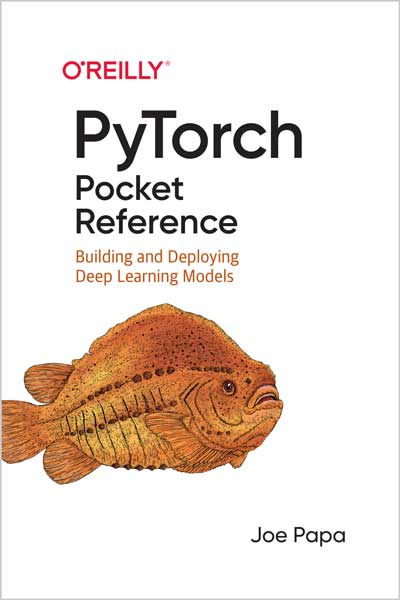

Creating and Deploying Deep Learning Applications

Ian Pointer

Deep learning is changing everything. This machine-learning method has already surpassed traditional computer vision techniques, and the same is happening with NLP. If you're looking to bring deep learning into your domain, this practical book will bring you up to speed on key concepts using Facebook's PyTorch framework.

Once author Ian Pointer helps you set up PyTorch in a cloud-based environment, you'll learn how to use the framework to create neural architectures for performing operations on images, sound, text, and other types of data. By the end of the book, you'll be able to create neural networks and train them on multiple types of data.

- Learn how to deploy deep learning models to production
- Explore PyTorch use cases in companies other than Facebook
- Learn how to apply transfer learning to images
- Apply cutting-edge NLP techniques using a model trained on Wikipedia

From the Preface

The definition of deep learning is often more confusing than enlightening. One way to define it is to say that deep learning is a machine learning technique that uses many layers of nonlinear transforms to progressively extract features from raw input. That is true, but it doesn’t really help, does it? I prefer to describe it as a technique for solving problems by providing the inputs and desired outputs and letting the computer find the solution, normally using a neural network.

One thing about deep learning that scares off a lot of people is the mathematics. Look at just about any paper in the field and you’ll be subjected to almost impenetrable amounts of notation with Greek letters all over the place, and you’ll likely run screaming for the hills. Here’s the thing: for the most part, you don’t need to be a math genius to use deep learning techniques.

In fact, for most day-to-day basic uses of the technology, you don’t need to know much at all, and to really understand what’s going on (as you’ll see in Chapter 2), you only have to stretch a little to understand concepts that you probably learned in high school. So don’t be too scared about the math.

By the end of Chapter 3, you’ll be able to put together, with just a few lines of code, an image classifier that rivals what the best minds in 2015 could offer.

PyTorch

This book will introduce you to deep learning via PyTorch, an open source offering from Facebook that facilitates writing deep learning code in Python.

PyTorch has two lineages. First, and perhaps not entirely surprisingly given its name, it derives many features and concepts from Torch, which was a Lua-based neural network library that dates back to 2002. Its other major parent is Chainer, created in Japan in 2015. Chainer was one of the first neural network libraries to offer an eager approach to differentiation instead of defining static graphs, allowing for greater flexibility in the way networks are created, trained, and operated. The combination of the Torch legacy plus the ideas from Chainer has made PyTorch popular over the past couple of years.
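To make the eager approach to differentiation more concrete, here is a minimal sketch (not taken from the book) in which ordinary Python control flow decides which computation runs, and PyTorch records the operations as they execute. The function name `f` and the input values are illustrative assumptions.

```python
import torch


def f(x):
    # Hypothetical example function: the branch is chosen at run time
    # by plain Python, with no graph defined ahead of time.
    if x.sum() > 0:
        return (x ** 2).sum()
    return (x ** 3).sum()


# Positive input: the squared branch runs, so the gradient is 2 * x.
x_pos = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)
f(x_pos).backward()
grad_pos = x_pos.grad.clone()

# Negative input: the cubed branch runs, so the gradient is 3 * x ** 2.
x_neg = torch.tensor([-1.0, -2.0], requires_grad=True)
f(x_neg).backward()
grad_neg = x_neg.grad.clone()

print(grad_pos, grad_neg)
```

Because the operations are recorded while the code runs, the same function can follow a different path on each call and still be differentiated.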

The library also comes with modules that help with manipulating text, images, and audio (torchtext, torchvision, and torchaudio), along with built-in variants of popular architectures such as ResNet (with weights that can be downloaded to provide assistance with techniques like transfer learning, which you’ll see in Chapter 4).

Aside from Facebook, PyTorch has seen quick acceptance by industry, with companies such as Twitter, Salesforce, Uber, and NVIDIA using it in various ways for their deep learning work. As you’ll see in this book, although PyTorch is common in more research-oriented positions, with the advent of PyTorch 1.0, it’s perfectly suited to production use cases.

Editorial Reviews

About the Author

Ian Pointer is a data engineer, specializing in machine learning solutions (including deep learning techniques) for multiple Fortune 100 clients. Ian is currently at Lucidworks, where he works on cutting-edge NLP applications and engineering.